The basic idea is:

- Break the problem into subproblems that are smaller versions of the same problem which can be solved independently

- Recursively solve these problems

- Combine the answers

Multiplication

We can multiply 2 n-bit numbers x and y. For now, assume that n is a power of two.

Divide and Conquer Idea: We want to split x and y into their left and right halves where each is bits long. Then, and where each half is bits long and is a left shift by bits.

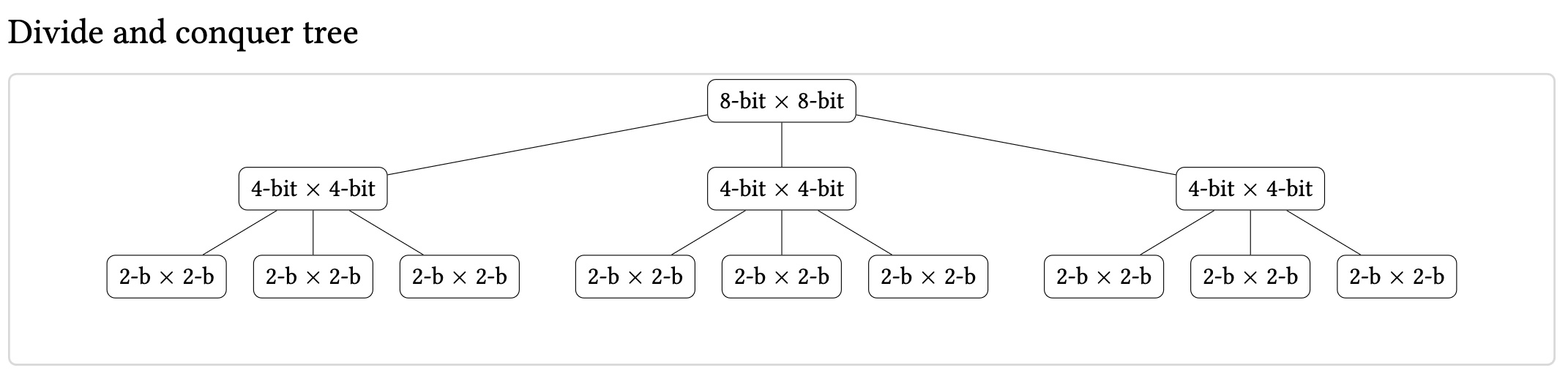

Combining these concepts, we can find that . Therefore, to multiply 2 n but numbers, we can instead multiply these four pairs of bit numbers and combine with three additions and two bit shifts as shown in the above equation.

The runtime of this algorithm is where is the runtime on n-bit numbers. This ends up being . Instead, if we just compute each term without doing the bit-shifting, our runtimes ends up being .

Formal Algorithm

function multDC(x,y)

Input: 2 n-bit positive integers x and y

Output: x*y

if n = 1:

return x*y

x_l = leftmost n/2 bits of x (ceil), x_r = rightmost n/2 bits of x (floor)

y_l = leftmost n/2 bits of y (ceil), y_r = rightmost n/2 bits of y (floor)

P1 = multDC(x_l, y_l)

P2 = multDC(x_r, y_r)

P3 = multDC(x_l+x_r, y_l+y_r)

return 2^(2n/2 floor)*P_1+2^(n/2 floor)(P3-P1-P2)+P2

From this tree we can determine the run-time since the divide and conquer tree has a depth of logn and each level k has nodes, the bottom level has multiplications which after a bunch of log math, ends up being .

Recurrence Relations

We can solve a problem of size n by dividing into a subproblems of size (ceil) and combining their answers in time: .

Masters Theorem

If for some constant , , . Then:

.

See the lecture notes for the proof. But essentially this goes from the bottom levels dominating, to all levels doing the same work, to top levels dominating.

Here, a is the number of subproblems, is the size of the sub problem, and d is the factor of work to combine their answers.

See use in Sorting Algorithms, specifically Merge Sort.